During the past year, because of my band taking off, the game room was moved from the central room to the music room and the music room moved to the central room. There’s a lot more room for the guys to play and there’s sufficient room in the game room, formerly music room, to game. Currently I have a Shadowrun group that plays every Sunday. It does seem a touch tight but we’re really sitting around the table so it’s not terrible.

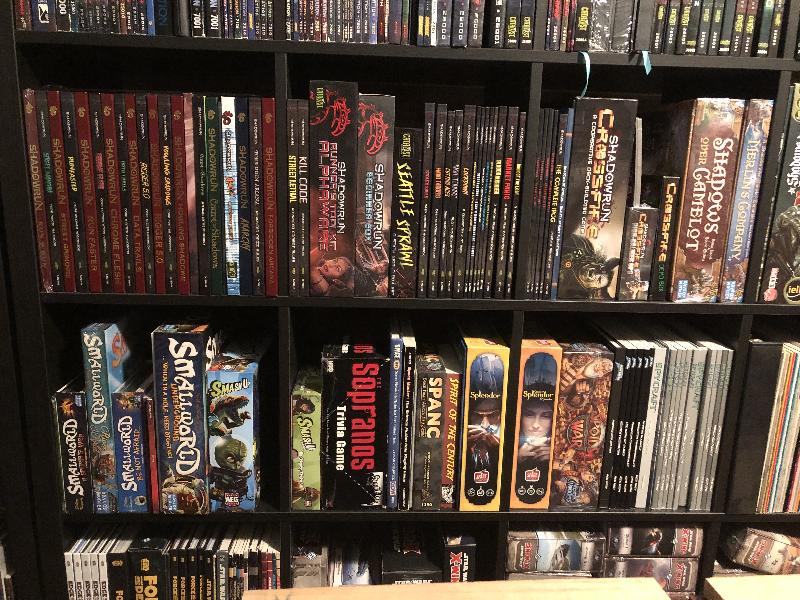

Of course, moving everything was a bit of a pain but I got it done in a week and the guys helped with the bigger Kallax shelves. Generally when I get new games, they’re stuck every which way on top of shelves or on the games in the shelves. Most recently, in preparation for the game room reorganization, I picked up a few more 1×4, 2×2, and 2×4 shelves to better organize the games. It just goes to show that games will fill every available space 🙂

I also took on the task of ensuring all my games were in my inventory. For each one, I noted whether I had found it (there are about 15 or so that I haven’t been able to find since the move) and whether or not I’d played it. I will remind folks who goggle at the list that it just shows that I’ve played the game, not how many times I’ve played it. Seeing a few hundred plays over 50 years doesn’t seem like a lot, but taking into consideration dozens if not hundreds of plays for some games puts the data in perspective 🙂

Since I updated the inventory, I can’t give you a definitive number of new games for the year. I’ve picked Gloomhaven as a starting point for 2018 as it was released in mid-January. Since Gloomhaven, I’ve added 338 items to the inventory.

List of the RPG game system purchases:

* Shadowrun – The bulk will be fill-it-in digital purchases as my gaming group started this past summer and I wanted to have all the digital stuff ready for the group (I tend to play with a laptop and iPad vs a gigantic collection of books; but the books are available if needed).

* Dungeons and Dragons.

* Paranoia – I kickstarted the new Paranoia and hit NobleKnight to fill in my collection. I’d only picked up a few Mongoose books and needed to fill things in.

* Starfinder

* Star Trek – New system that looks interesting. I may spring it on the group at a later date.

* Conan 2d20 – I’m a big fan of Conan anyway so this was a must have

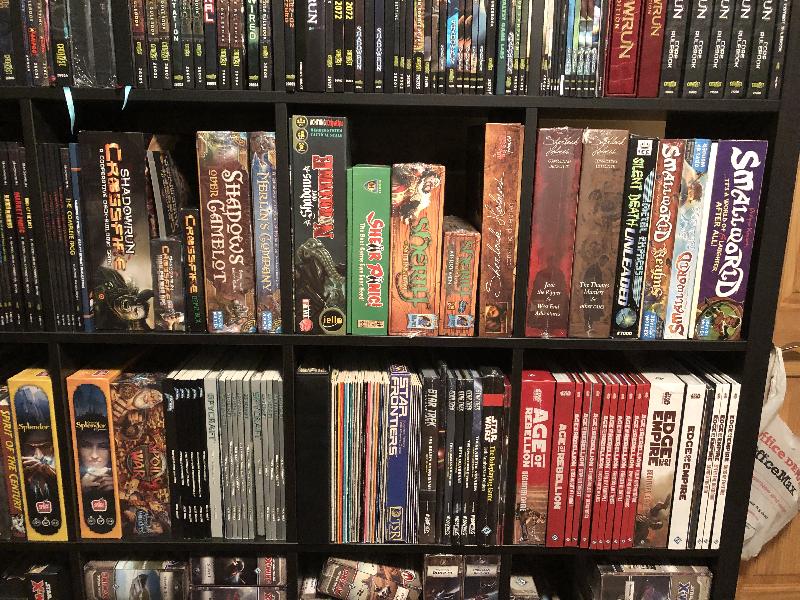

* Star Wars

* Pathfinder

* Genesys

That drops the list down to 83. List of the card game purchases and updates:

* Arkham Horror the card game

* Netrunner the card game

* Clank

* DC Deck Building

* Exploding Kittens

* Joking Hazard

* Munchkin

This brings down the number of board games to 57. Of those, actual new ones (vs hitting the used game store) are:

* Arboretum

* Betrayal Legacy

* Captain Sonar

* Cave vs Cave (Caverna)

* Chicago Express

* Dragon Castle

* Forbidden Sky

* Gloomhaven

* Founders of Gloomhaven

* Sanctum of Twilight (Mansions of Madness)

* Roll for the Galaxy

* The Rise of Fenris (Scythe)

* Shadowrun Zero Day

* Spoils of War

* Colonies (Terraforming Mars)

* Prelude (Terraforming Mars)

* Rails and Sails (Ticket to Ride)

* Triplanetary (Kickstarter)

* Green Horde (Zombicide)

With everything else, we only got to play a few of the above games (bolded). My band had its first gig in August so we were practicing hard 🙂 I’ve also spent more time on my Shadowrun group and especially the program I created and have been frantically updating for Shadowrun 5th Edition.

Some of the Reddit Boardgaming questions answered here:

How long have you been involved in the hobby?

Since I was young so about 50 years.

What would you change about your collection if you could?

Tough question. I get games because I’ve played them with others or they’re recommended. Since it’s generally just Jeanne and me, more two player games would be optimum. The only real change would be paying more attention to the number of players for the game. The Thing is an awesome game but really needs 6 or so players for it to work.

Which games might be leaving my collection soon?

Leave? You can’t leave me. None actually. I went through a phase back in the mid-90s where I almost sold off what I had, but the company (since bought by Noble Knight) only offered me pennies on the dollar (and only a few), and I would have had to ship it to them, cutting into that even more. So I basically just kept them while I got more into video games. Now I’m glad I kept them, if only for nostalgia.

What haven’t I played?

Well, most of the games I bought before 2006 I’ve played. I gradually picked up more games between 2006 and 2012 and then really started exploring board games. I also have a pretty large collection of RPG books mainly because I used them for ideas for my AD&Dr1 game I ran for many years. In checking my game report, as of right now:

* Board Games: Inventory: 361, Played: 156, Percentage Played: 43.21

* Card Games: Inventory: 107, Played: 46, Percentage Played: 42.99

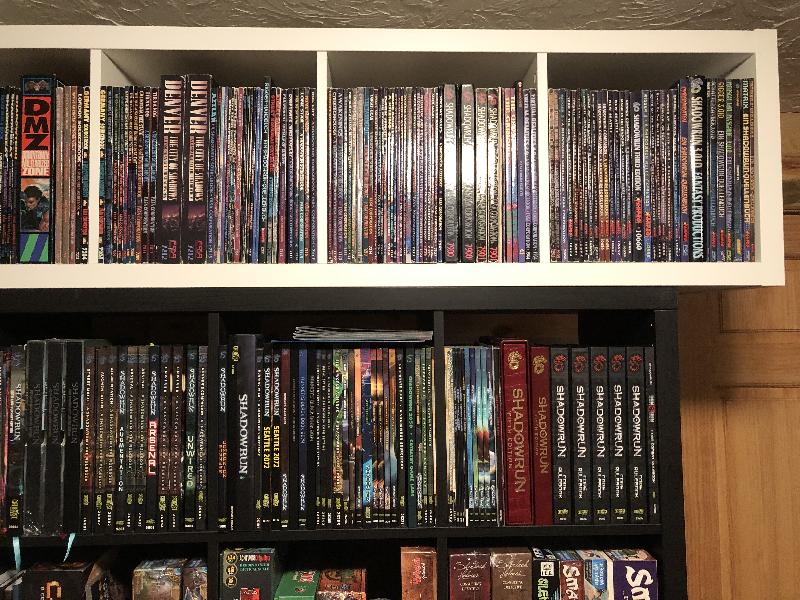

* Role Playing Games: Inventory: 276, Played: 31, Percentage Played: 11.23
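For anyone who wants to check the math, here is a minimal sketch of how those percentages fall out of the inventory and played counts. The counts are copied from the report above; the `report` dictionary and the `percentage_played` helper are just names made up for this example, not the actual program that generates my game report.

```python
# Counts copied from the game report above: (inventory, played).
# The dictionary layout and function name are illustrative assumptions.
report = {
    "Board Games": (361, 156),
    "Card Games": (107, 46),
    "Role Playing Games": (276, 31),
}


def percentage_played(inventory, played):
    """Return the played share of the inventory as a percentage, to two decimals."""
    return round(played / inventory * 100, 2)


for category, (inventory, played) in report.items():
    print(f"{category}: {percentage_played(inventory, played):.2f}%")
```

Keep in mind this only counts whether a game has been played at all, not how many times.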

The RPG Games stats are a bit misleading as well since I generally have multiple copies of core rule books for use at the table or for various editions. For instance, I have probably 25 Shadowrun core books from 1st Edition up through 5th Edition. Same with D&D. I have several DM’s Guides from 1st Edition up through 5th Edition. Both include collectible editions (the numbered Shadowrun books for instance) and even a few books in different languages. I have a Spanish and a German Shadowrun core book.

In looking at my report, including every game, module, and expansion, I have 3,005 items. This doesn’t include dice though which exceed that by at least another 1,000 🙂

What are your favorite games?

This shifts so much that it’s hard to pin down. Cosmic Encounter, Car Wars, Shadowrun, Ticket to Ride, Splendor, Red Dragon Inn, Elder Sign, Eldritch Horror, Netrunner, The Castles of Burgundy, Pandemic Iberia, Bunny Kingdom, DC Deck Building, the list goes on.

Favorite Boardgame of the past year?

I’d say Ticket to Ride Rails and Sails was the one we enjoyed playing the most. We introduced it to several others who have also purchased the game.

Most played boardgame?

Probably something like Cosmic Encounter, outside of something like Risk or Monopoly 🙂

What is your least favorite game?

That’s a tough question in that most games are somewhat fun and/or interesting. Probably the one that disappointed me most was Fragged. It’s a Doom video game set to board game and really doesn’t translate well. We played it once and decided we’d rather play the video game 🙂

I will say that we did try several of the Legendary card games and really had a hard time playing them. I particularly like Big Trouble in Little China but the Legendary game we played just killed the game itself for us and I haven’t picked up another since then.

Next boardgame purchase?

No real plans on a specific board game. I mainly look forward more to the next Shadowrun book.

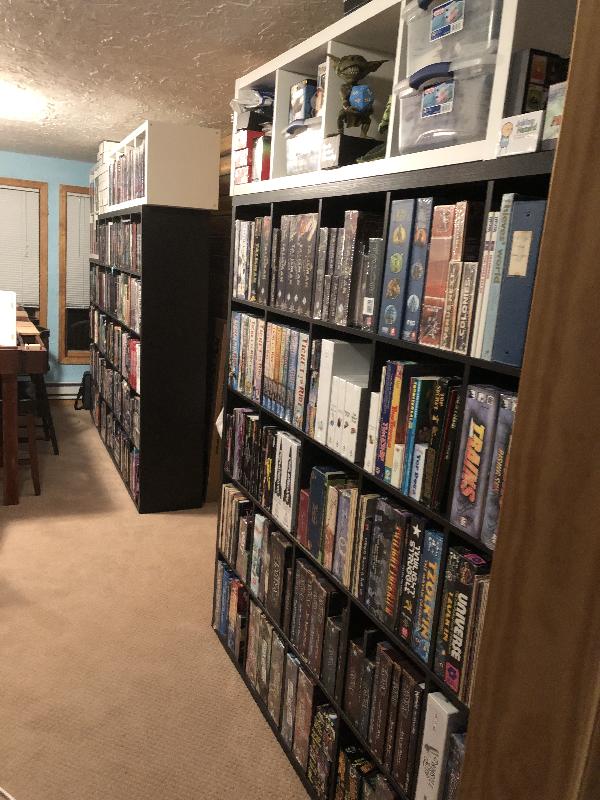

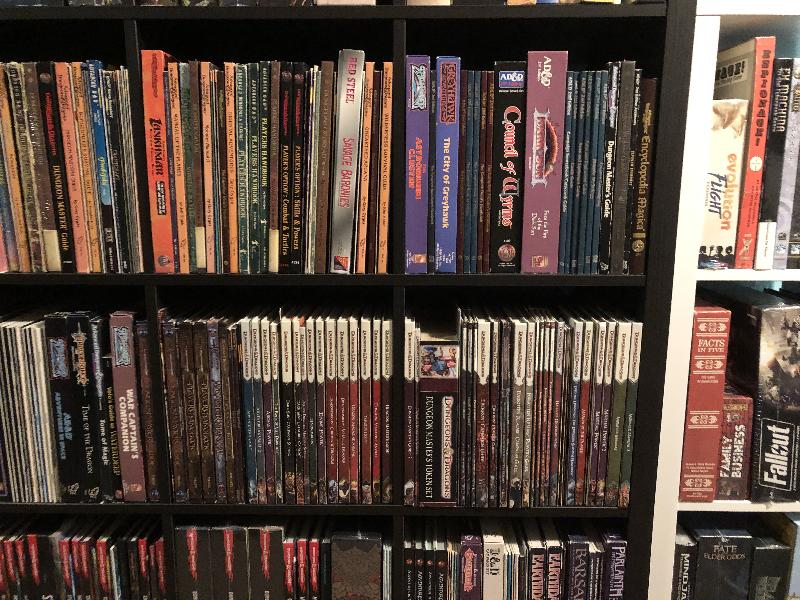

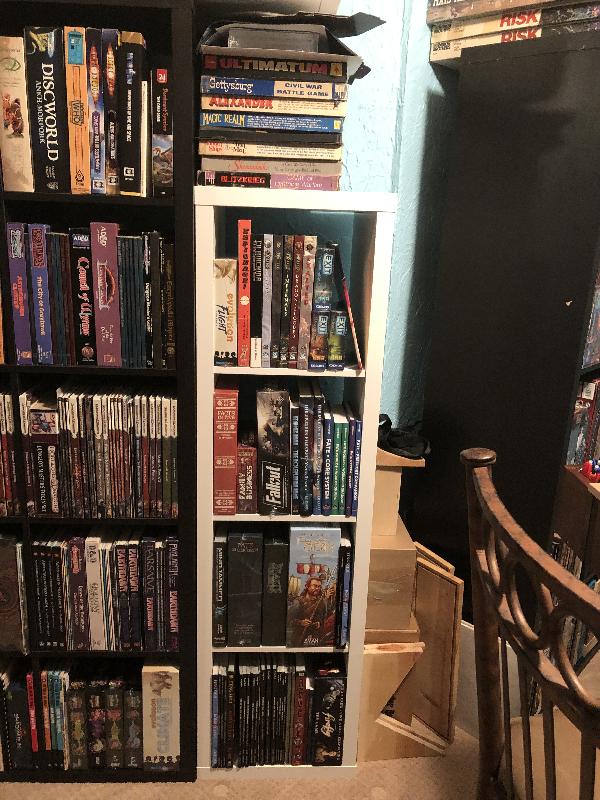

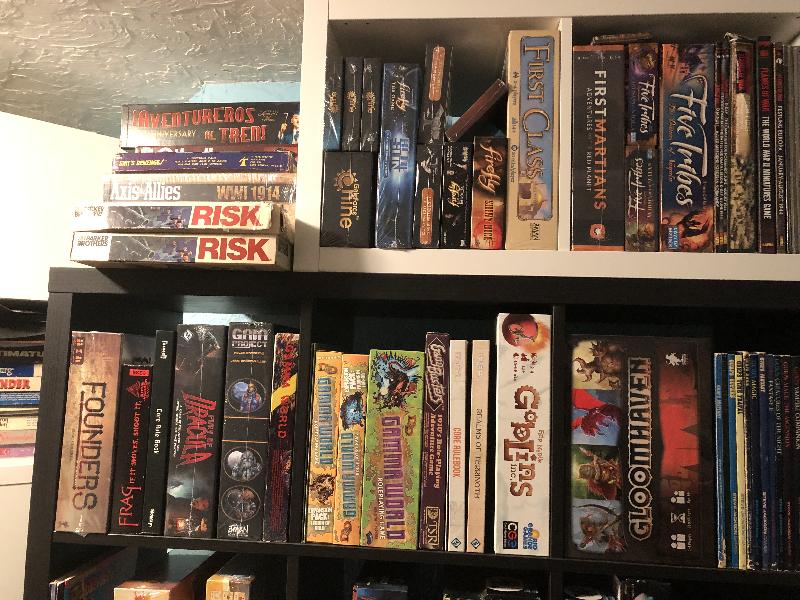

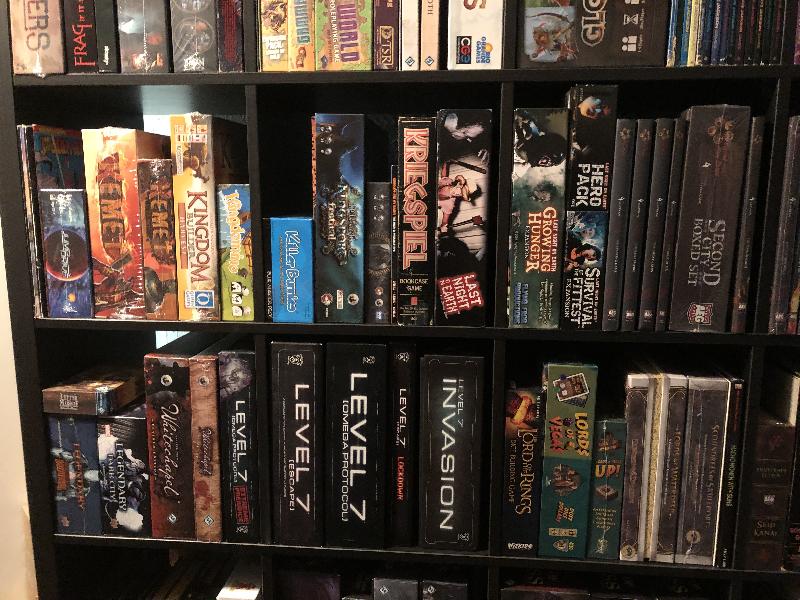

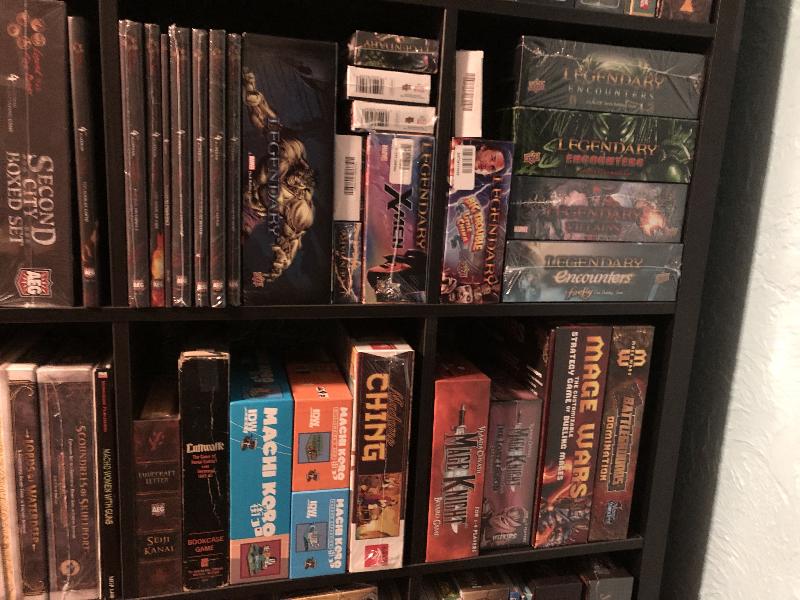

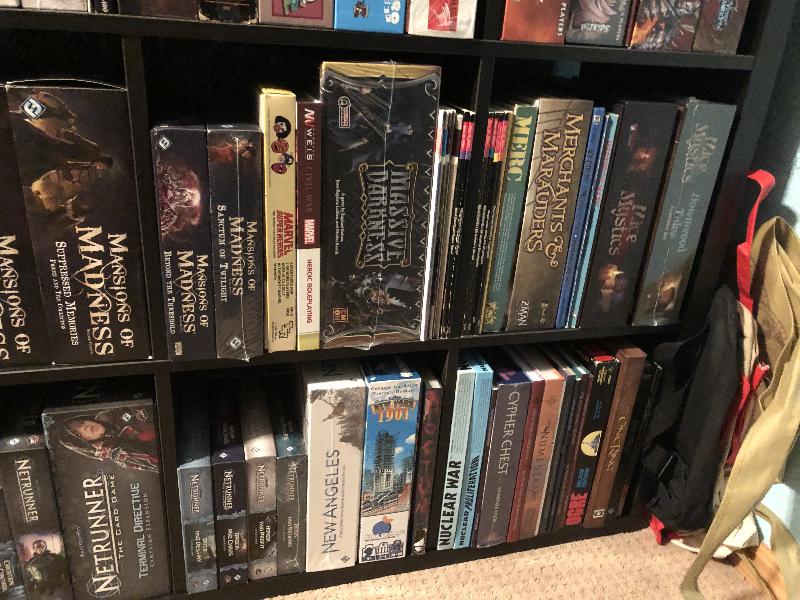

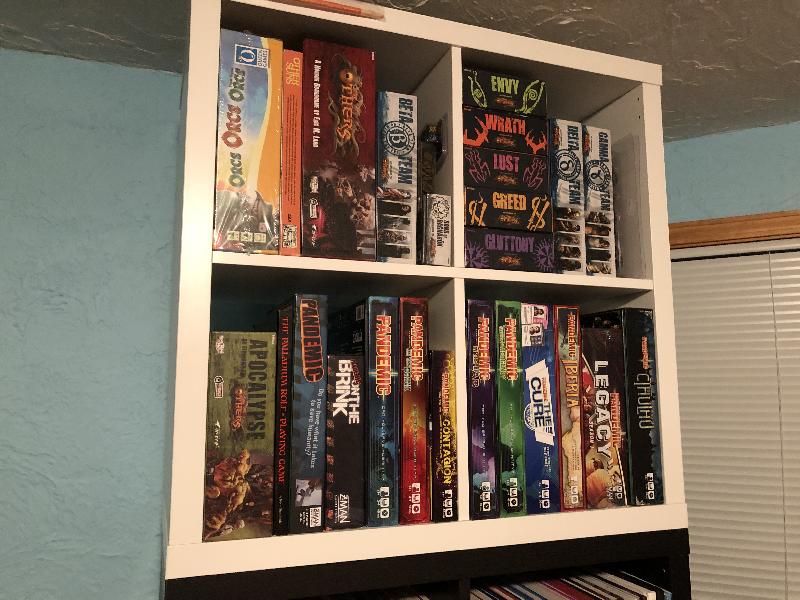

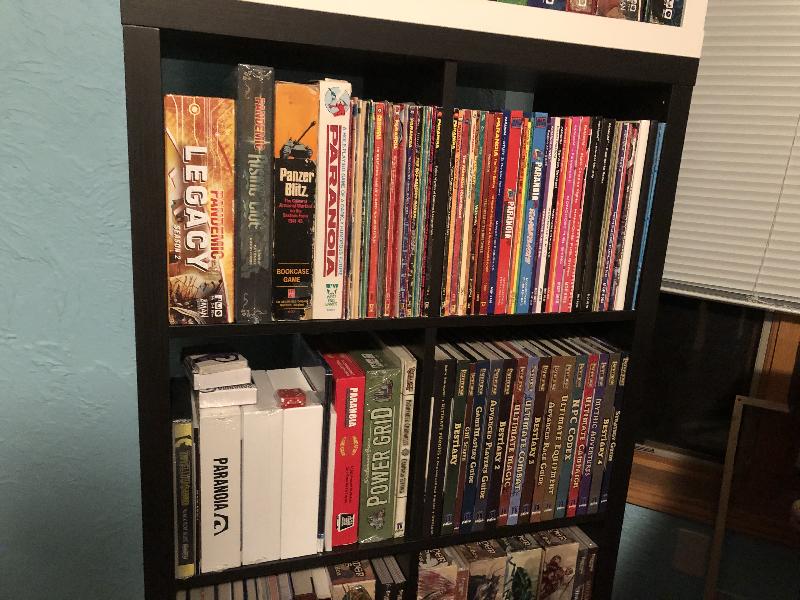

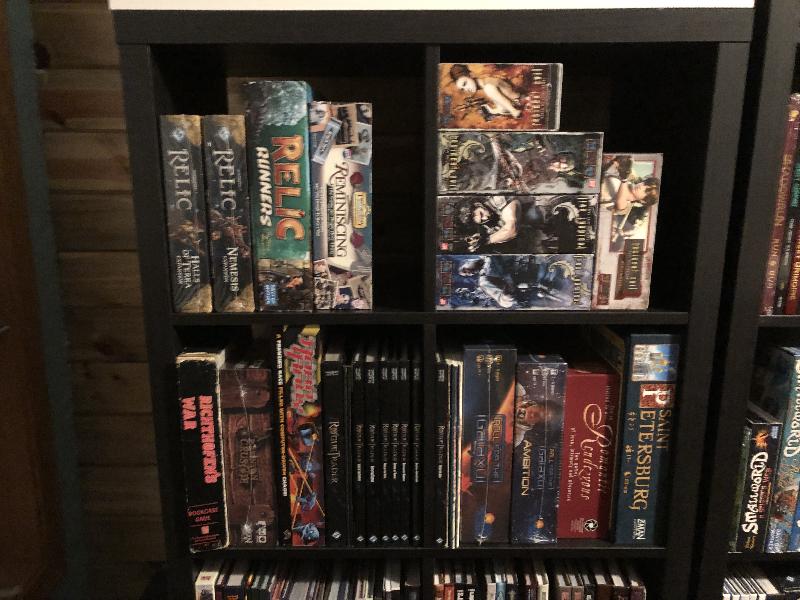

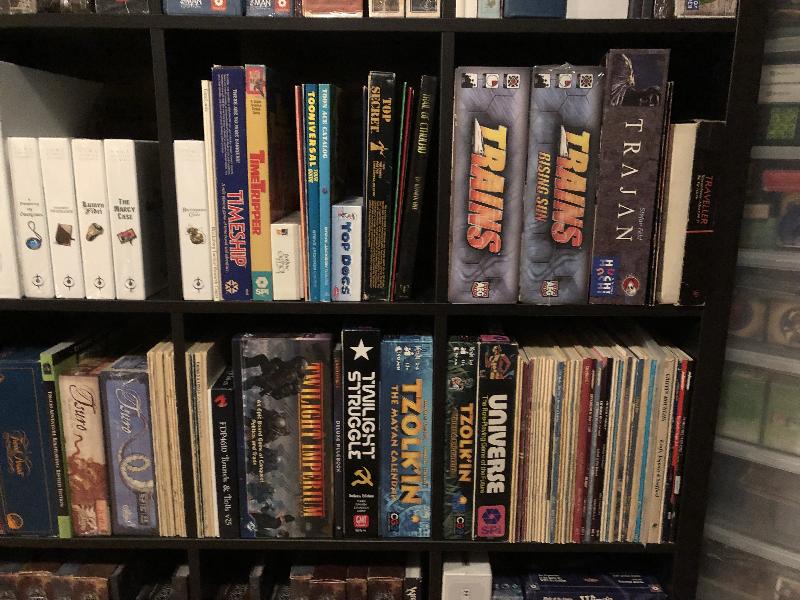

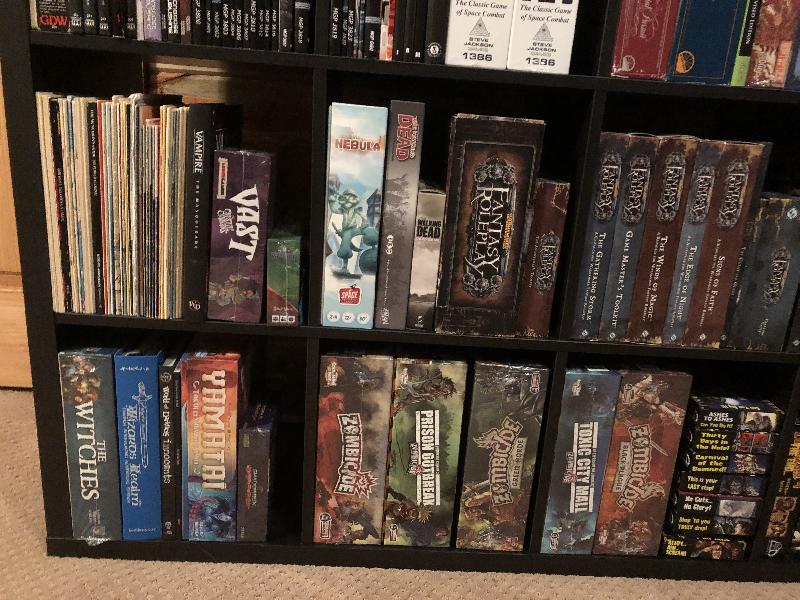

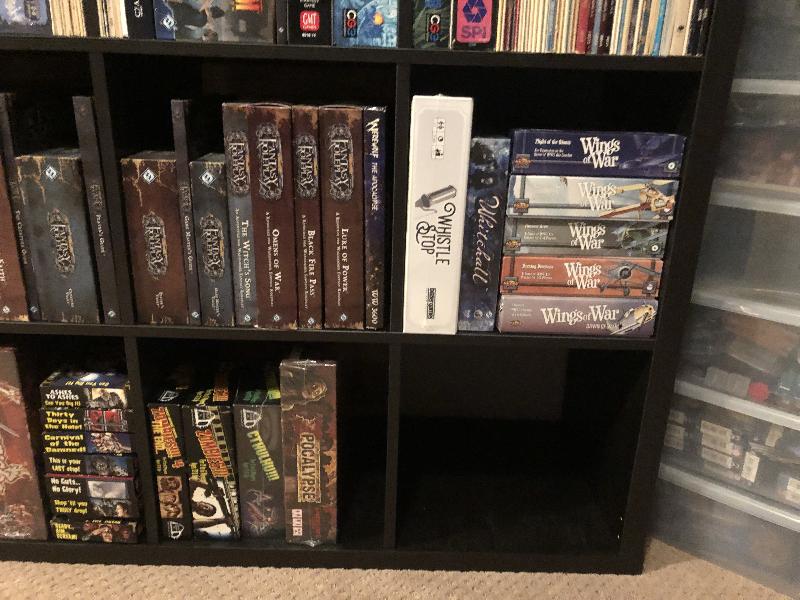

On to the pictures! I have four 5×5 Kallax shelves, three 2×4 Kallax shelves, two 2×2 Kallax shelves, and five 1×4 Kallax shelves. That’s 152 Kallax squares (not all filled though). Pictures are basically 3 or 4 squares wide and two shelves high so there are a lot of pictures.
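If you’re curious where 152 comes from, here’s a quick sketch of the arithmetic. The shelf counts and sizes are the ones listed above; the `shelves` list and variable names are just made up for the example.

```python
# Each entry is (number of units, rows, columns), taken from the shelf list above.
shelves = [
    (4, 5, 5),  # four 5x5 units
    (3, 2, 4),  # three 2x4 units
    (2, 2, 2),  # two 2x2 units
    (5, 1, 4),  # five 1x4 units
]

# Squares per unit is rows * columns; multiply by how many of that unit I have.
total_squares = sum(count * rows * cols for count, rows, cols in shelves)
print(total_squares)  # 100 + 24 + 8 + 20 = 152
```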

Full Gallery: Photo Gallery (Note that you can click on the pics here to see the full size pic.)

Come on in!

It is a bit narrow but it’s a game room. As long as tables and such fit, it should be good.

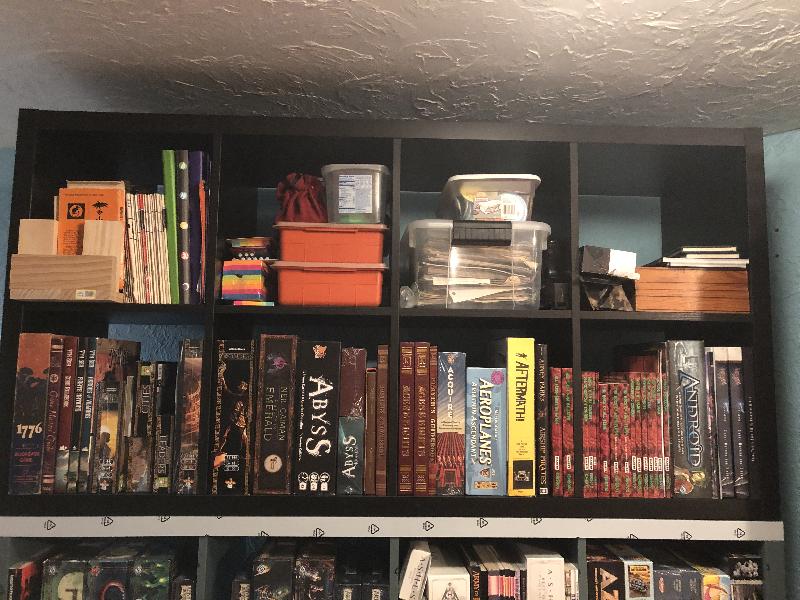

The top row here holds some miscellaneous stuff including, on the right, my Dad’s old chess set. This is the first shelf on the left as you enter the room.

Next shelf.

This is a side shelf to the second shelf. Just trying to make use of the space.

You can’t really see this shelf from the door due to the angle of the room.

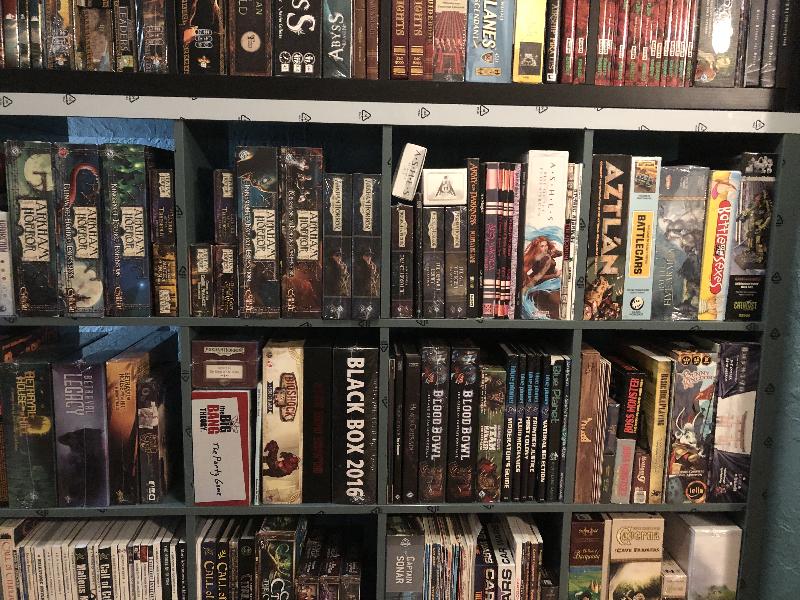

One of the 2×4 + 2×2 shelves.

And the other 2×4 + 2×2 shelf.

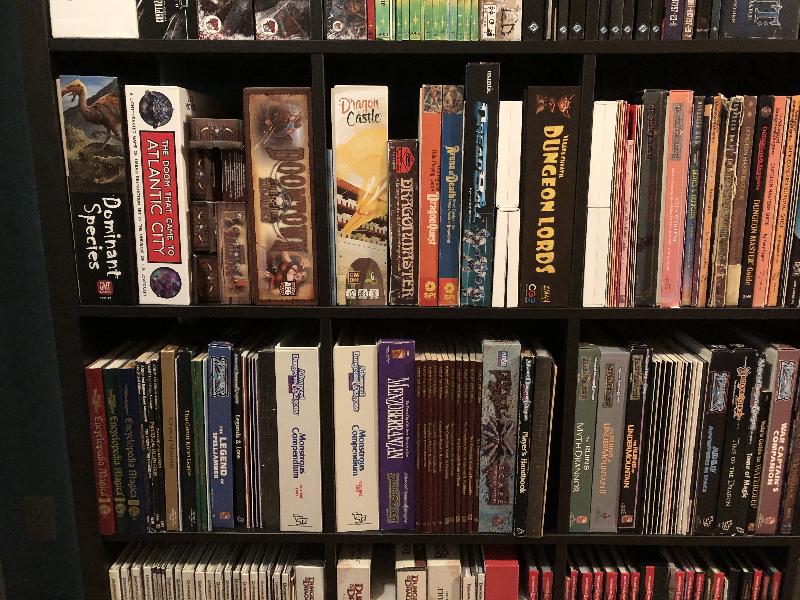

This is one unit where I might push the top shelf back a little. Lots of RPG books in this one so it’s a bit heavier than the others. That’s generally my Netrunner, Shadowrun, Arkham Horror, and Force of Will cards on the left.

And the final bookshelf. The top here also has miscellaneous stuff. Card decks I haven’t put into the above deck boxes, Cards Against Humanity, etc. On the right are a few more card decks including my Xxxenophile deck.

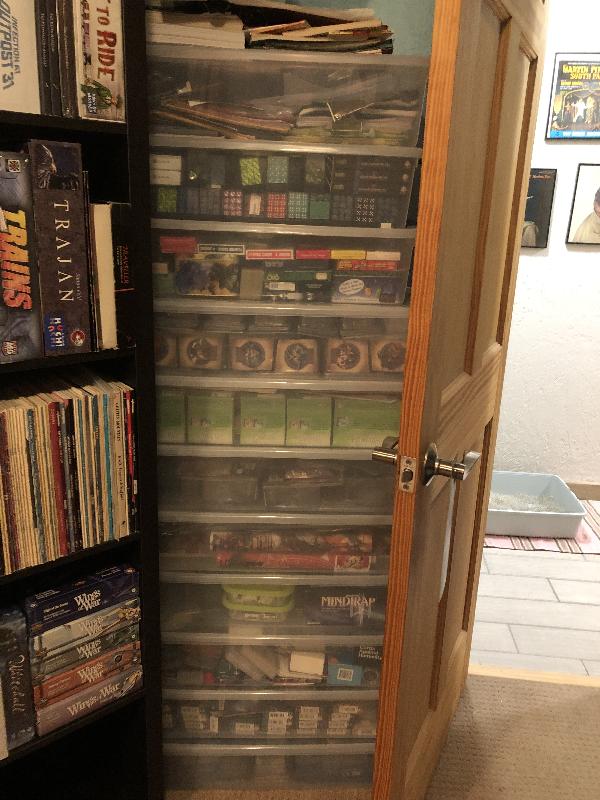

And behind the door are my boxes for other miscellaneous stuff. Dice, card games, miniatures, Wings of War planes, playmats, etc.

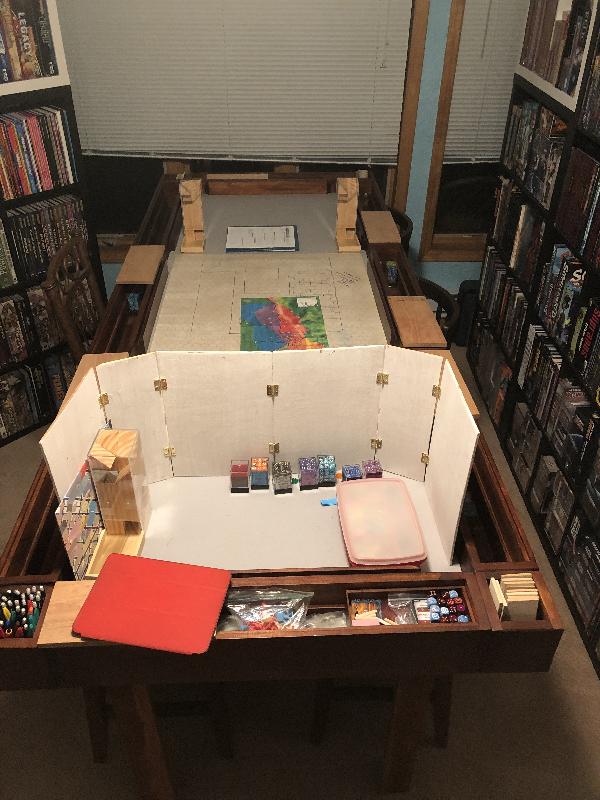

And finally, my gaming table. Currently set up for Shadowrun. I have a second table I’m considering setting up for regular board gaming.

And that’s the list.